It wasn’t that long ago that enterprises housed their critical business applications exclusively in their own networks of servers and client PCs. Monitoring and troubleshooting performance issues, such as latency, was easy to implement.

Although network monitoring and diagnostics tools have greatly improved, the introduction of a multitude of interconnected SaaS applications and cloud-hosted services has greatly complicated typical network configuration, which can have a negative impact.

As companies outsource more and more applications and data hosting to external providers, this introduces weaker and weaker links into the network. SaaS services are generally reliable, but without a dedicated connection, they can only be as good as the Internet connection they use.

From a network management perspective, the secondary issue with externally hosted apps and services is that the IT team has less control and visibility, making it more difficult for service providers to stay within their service level agreements (SLAs).

Monitoring network traffic and troubleshooting within the relatively controlled environment of an enterprise headquarters, is manageable for most IT teams.

But for organizations based on a distributed business model, with multiple branch offices or employees in remote locations, using dedicated MPLS lines quickly leads to high costs.

When you consider that traffic from applications like Salesforce, Skype for Business, Office 365, Citrix and others, typically bypass the main office, it’s not surprising that latency is becoming more common and increasingly difficult to troubleshoot.

One of the first victims of latency is VoIP call quality, which manifests itself in unnatural delays in phone calls. However, with the explosive growth of VoIP and other UCaaS applications, this problem will continue to grow.

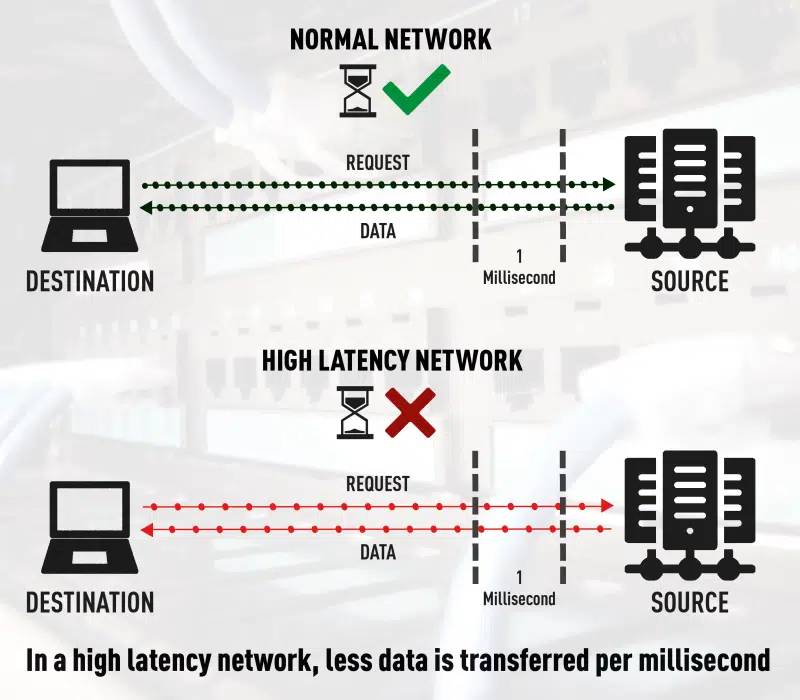

Another area where latency takes its toll is data transfer speeds. This can lead to a number of problems, especially when transferring or copying large data files or medical records from one location to another.

Latency can also be an issue for large data transactions, such as database replication, as more time is required to perform routine activities.

Impact of decentralized networks and SaaS

With so many connections to the Internet, from so many locations, it makes sense for enterprise network performance monitoring to be done out of the data center. One of the best approaches is to find tools that monitor connections at all remote sites.

Most of us use applications like Outlook, Word and Excel almost every day. If we’re using Office 365, those applications are likely configured to connect to Azure, not the enterprise data center.

If the IT team doesn’t monitor network performance directly at the branch office, they completely lose sight of the user experience (UX) at that location. You may think the network is working fine, when in fact users are frustrated because of a previously undiagnosed problem.

When traffic from SaaS providers and other cloud-based storage providers is routed to and from an enterprise, it can be negatively impacted by jitter, trace route, and sometimes compute speed.

This means that latency becomes a very serious limitation for end users and customers. Working with vendors that are close to the data needed is one way to minimize potential issues due to distance. But even in a parallel process, you may have thousands or millions of connections trying to get through at once. Although this results in a rather small delay, they build up and become larger over long distances.

Is machine learning the answer to high network latency?

It used to be that each IT team could define and monitor clear network paths between its enterprise and data center. They could control and regulate applications running on internal systems because all data was installed and hosted locally without accessing the cloud.

This level of control provided better insight into issues such as latency and allowed them to quickly diagnose and fix any problems that may arise.

Almost ten years later, the proliferation of SaaS applications and cloud services has now complicated network performance diagnostics to the point where new measures are needed.

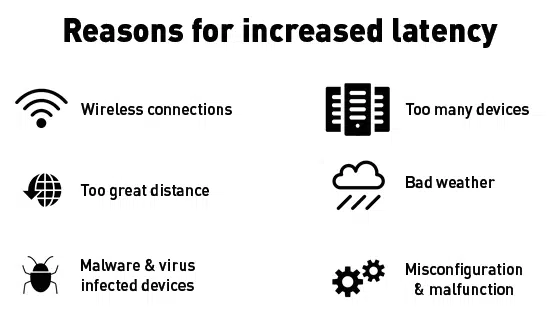

What is the cause of this trend? The simple answer is added complexity, distance and lack of visibility. When an organization transfers its data or applications to an external provider instead of hosting them locally, this effectively adds a third party into the mix of network variables.

Each of these points leads to a potential vulnerability that can impact network performance. While these services are, for the most part, quite stable and reliable, outages in one or more services do occur, even among the industry’s largest, and can impact millions of users.

The fact is that there are many variables in a network landscape that are out of the control of enterprise IT teams.

/p>One way companies try to ensure performance is to use a dedicated MPLS tunnel leading to their own corporate headquarters or data center. But this approach is expensive and most companies do not use this method for their branch offices. As a result, data from applications such as Salesforce, Slack, Office 365 and Citrix will no longer be transferred through the enterprise data center because they are no longer hosted there.

To some extent, latency can be mitigated by using traditional methods to monitor network performance, but latency is inherently unpredictable and difficult to manage.

But what about artificial intelligence? We’ve all heard examples of technologies that are making great strides using some form of machine learning. Unfortunately, however, we are not at the point where machine learning can significantly minimize latency.

We cannot predict exactly when a particular switch or router will be overloaded with traffic. The device may experience a sudden burst of data, causing a delay of only one millisecond or even ten milliseconds. The fact is, once these devices are overloaded, machine learning cannot yet help with these sudden changes to prevent queues of packets waiting to be processed.

Currently, the most effective solution is to tackle latency where it affects users the most – as close to their physical location as possible.

In the past, technicians used Netflow and/or a variety of monitoring tools in the data center, knowing full well that most of the traffic was getting to their server and then returning to their customers. With such a much larger distribution of data today, only a small portion of the data makes it to the servers, which makes monitoring your own data center far less efficient.

Rather than relying solely on such a centralized network monitoring model, IT teams should supplement their traditional tools by monitoring data connections at each remote site or branch office. Compared to current practices, this is a change in mindset, but it makes sense: if data is distributed, network monitoring must be distributed as well.

Applications like Office 365 and Citrix are good examples, as most of us use productivity and unified communications tools on a regular basis. These applications are more likely to be connected to Azure, AWS, Google or others, rather than the company’s own data center. If the IT team is not actively monitoring this branch, they will completely lose sight of the user experience at this location.

Choose a comprehensive and appropriate approach

Despite all the benefits of SaaS solutions, latency will continue to be a challenge unless enterprise IT teams rethink their approach to network management.

In short, they need to take a comprehensive, decentralized approach to network monitoring that encompasses the entire network and all its branches. Better ways to monitor the user experience and improve it as needed must also be found.

Focus on the user experience

There is no doubt that the proliferation of SaaS tools and cloud resources has been a boon for most enterprises. However, the challenge for IT teams now is to rethink the approach to network management in a decentralized network. An important issue is certainly the ability to effectively monitor that SLAs (service level agreements) are being met. Even more important, however, is the ability to ensure quality of service for all end users.

To achieve this, IT professionals need to see exactly what users are experiencing in real time.

This transition to a more proactive monitoring and troubleshooting style helps IT professionals resolve network or application bottlenecks of any kind before they become problematic for employees or customers.

Conclusion

Ergo, in order to ensure the lowest possible latencies and an associated optimal user experience, monitoring based on central measuring points is no longer sufficient in most cases.

While monitoring can still remain centralized, the measuring points must be increasingly decentralized.